In Industrial IoT (IIoT), edge computing is being transformed by Small Language Models (SLMs). At the “Automation Across Borders” event during ALTEN International Tech Week, solutions engineer Salman Sattar explained how SLMs, with their lightweight architectures, are reshaping industrial automation in demanding environments that require real-time decision-making.

Symbiotic Relationship Between Edge Devices and SLMs

Industrial edge devices act as the "data first responders" of automation systems, equipped with sensors and real-time data acquisition capabilities. They have traditionally relied on the cloud for decision-making, a model that struggles in latency-sensitive scenarios such as maritime shipping and autonomous navigation. SLMs change this paradigm:

01 Parameter Scale

SLMs have from millions to a few billion parameters (compared with hundreds of billions to trillions for the largest LLMs) and can run on edge devices such as the Raspberry Pi.
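Parameter count matters because it largely decides how much memory the weights need: roughly parameters × bytes per parameter. The sketch below applies that rule of thumb; the model sizes are illustrative (3.8B is about the size of Phi-3-mini), and the estimate ignores activations, KV cache and runtime overhead, so real memory use will be higher.

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Ignores activations, KV cache and runtime overhead.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Illustrative sizes: 1B, 3.8B (about Phi-3-mini) and 70B parameters.
    for label, params in [("1B", 1e9), ("3.8B", 3.8e9), ("70B", 70e9)]:
        sizes = ", ".join(
            f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB" for dtype in BYTES_PER_PARAM
        )
        print(f"{label} params -> {sizes}")
```

At INT8, a 3.8B-parameter model needs roughly 3.8 GB for its weights, while a 70B model needs about 70 GB, which is why only the smaller class fits on boards with a few gigabytes of RAM.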

02 Core Advantages

- About 85% lower energy consumption than comparable LLMs.

- 3–5x faster inference.

- Support for offline operation.

03 Three Technical Pillars

Knowledge Distillation: Uses a teacher-student framework to transfer the knowledge representations and output distributions of a large teacher model to a lightweight student model.
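As a minimal sketch of the teacher-student idea, the function below implements the common soft-target distillation loss: the student matches the teacher's temperature-softened output distribution (KL divergence, scaled by T²) while still fitting the hard label. The source does not say which distillation variant is meant; the temperature and the `alpha` weighting are assumed values, and plain Python lists stand in for framework tensors.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, stabilized by subtracting the max logit."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * soft-target loss + (1 - alpha) * hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions; the T^2 factor keeps
    # its gradient scale comparable to the hard-label term.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft = kl * temperature ** 2
    # Ordinary cross-entropy against the ground-truth class at T = 1.
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # illustrative logits from a large teacher
    student = [2.0, 1.5, 0.2]  # illustrative logits from a small student
    print(distillation_loss(student, teacher, label=0))
```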

Pruning: Removes redundant connections or neurons, ranked by an importance score such as weight magnitude, to make the network sparse.
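One common importance criterion is weight magnitude. The sketch below shows unstructured magnitude pruning on a flat list of weights, assuming a target sparsity; production pipelines usually prune per layer, often in structured blocks, and fine-tune afterwards to recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```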

Quantization: Stores model weights and performs computations in 8-bit integers (INT8) instead of 32-bit floating point (FP32). The lower bit width cuts weight memory by roughly 4× and reduces compute cost.
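A minimal sketch of symmetric per-tensor INT8 quantization, assuming a single scale for the whole tensor: each weight is mapped to an integer in [-127, 127] and recovered as `scale * q`, so the rounding error per weight is at most half a step. Real toolchains typically add per-channel scales, zero points and calibrated activation ranges.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map the 8-bit integers back to approximate FP32 values."""
    return [scale * v for v in q]


w = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(w)
print(q)  # [50, -127, 0, 90]  (scale is about 0.01)
```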

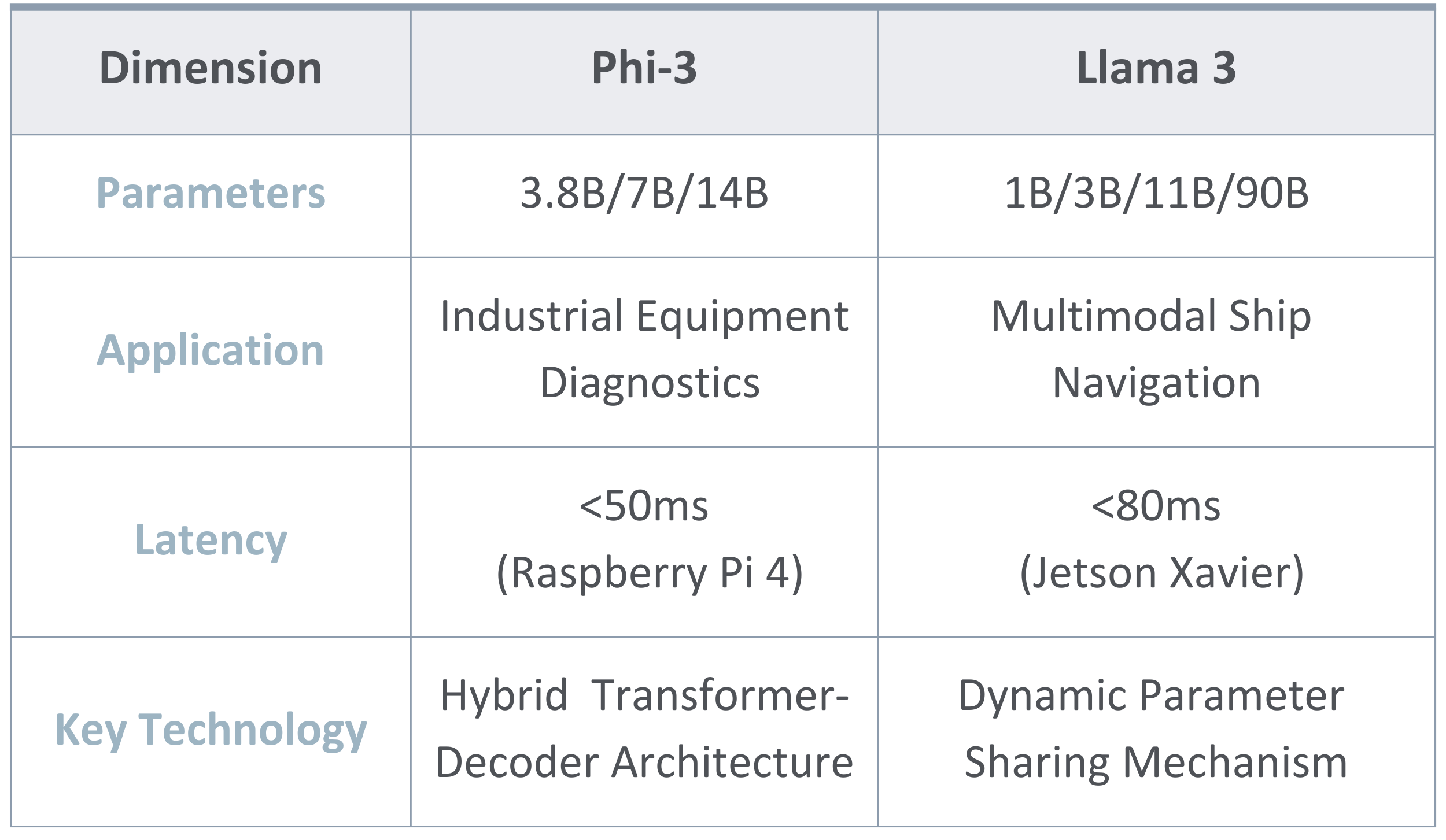

Practical Comparison: Microsoft Phi-3 vs. Llama 3 (Meta)

Case Study: Autonomous Energy Optimization in Shipping

In Arora Shipping Company’s deployment, the SLM system is built on a three-tier architecture:

01 Edge Layer

AWS IoT Greengrass devices deployed on generators and refrigeration units.

Real-time processing of ocean conditions (wave height, wind speed) and cargo types (refrigerated goods, vehicles).
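The edge layer's job is to turn noisy sensor streams into compact inputs for the local model. The sketch below assumes readings arrive as wave height and wind speed, smooths them over a sliding window, and formats a short text input; `SeaState`, `RollingSeaState` and the input format of `to_prompt` are hypothetical names, not part of AWS IoT Greengrass or the system described in the talk.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class SeaState:
    wave_height_m: float
    wind_speed_ms: float


class RollingSeaState:
    """Keeps the last `window` readings and reports their mean to smooth sensor noise."""

    def __init__(self, window: int = 10):
        self.readings: deque = deque(maxlen=window)  # old readings fall off automatically

    def add(self, reading: SeaState) -> None:
        self.readings.append(reading)

    def summary(self) -> SeaState:
        n = len(self.readings)
        if n == 0:
            raise ValueError("no readings yet")
        return SeaState(
            wave_height_m=sum(r.wave_height_m for r in self.readings) / n,
            wind_speed_ms=sum(r.wind_speed_ms for r in self.readings) / n,
        )


def to_prompt(state: SeaState, cargo: str) -> str:
    """Format the smoothed state as compact text input for a local SLM (hypothetical format)."""
    return f"wave={state.wave_height_m:.1f}m wind={state.wind_speed_ms:.1f}m/s cargo={cargo}"
```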

02 Decision Layer

The local SLM dynamically adjusts the ship's operating configuration based on parameters such as hull design and load capacity:

✓ Power-distribution algorithm error rate below 2%

✓ 18% improvement in fuel efficiency
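The source does not describe the power-distribution algorithm itself. As a hypothetical stand-in that shows the kind of decision involved, the sketch below allocates available generator power greedily by priority, for example serving refrigerated cargo before lower-priority loads; the load names, priorities and kW figures are illustrative assumptions.

```python
from typing import Dict, List, Tuple


def allocate_power(available_kw: float, loads: List[Tuple[str, float, int]]) -> Dict[str, float]:
    """Greedy allocation in priority order (lower number = higher priority).
    Each load is (name, demand_kw, priority); the last load served may get partial power."""
    allocation: Dict[str, float] = {}
    remaining = available_kw
    for name, demand_kw, _priority in sorted(loads, key=lambda load: load[2]):
        granted = min(demand_kw, remaining)
        allocation[name] = granted
        remaining -= granted
    return allocation


if __name__ == "__main__":
    loads = [("hotel", 100.0, 2), ("reefer", 300.0, 0), ("aux_pumps", 150.0, 1)]
    print(allocate_power(500.0, loads))  # {'reefer': 300.0, 'aux_pumps': 150.0, 'hotel': 50.0}
```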

03 Cloud Coordination

Voyage data is uploaded via Amazon Bedrock agents during port calls.

Amazon SageMaker then builds customized models for Arctic and tropical routes.
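Because uploads happen only during port calls, the vessel has to buffer voyage data while at sea. A minimal store-and-forward sketch follows; the `upload` callback is a hypothetical placeholder for whatever client actually sends data to the cloud, and is not an Amazon Bedrock or SageMaker API.

```python
import itertools
import json
from collections import deque
from typing import Callable, List


class VoyageBuffer:
    """Store-and-forward queue: records accumulate at sea and are flushed in port."""

    def __init__(self, max_records: int = 100_000):
        # Bounded queue: if storage fills up, the oldest records are dropped first.
        self._queue: deque = deque(maxlen=max_records)

    def record(self, event: dict) -> None:
        self._queue.append(json.dumps(event, sort_keys=True))

    def flush(self, upload: Callable[[List[str]], bool], batch_size: int = 500) -> int:
        """Send queued records in batches; stop at the first failed batch so unsent
        records stay queued for the next port call. Returns the number of records sent."""
        sent = 0
        while self._queue:
            batch = list(itertools.islice(self._queue, batch_size))
            if not upload(batch):
                break
            for _ in batch:
                self._queue.popleft()
            sent += len(batch)
        return sent

    def __len__(self) -> int:
        return len(self._queue)
```

Stopping at the first failed batch keeps the queue in order, so a flaky port connection never loses or reorders records.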

In-Depth Technical Architecture

Physical Environment Layer: Industrial equipment (vibration sensors, fuel flow meters).

Edge Device Layer: Embedded systems running quantized SLMs.

Software Processing Layer: Millisecond-level responses over MQTT and UDP.

Cloud Gateway Layer: Over-the-air (OTA) model updates delivered through AWS Lambda functions.
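OTA model updates are typically guarded so that a corrupted download never replaces a working model. The sketch below assumes the update metadata carries an expected SHA-256 digest: the device verifies the file, then swaps it in with `os.replace`, which is atomic on POSIX systems when both paths are on the same filesystem. The file names and the delivery side (the Lambda function) are assumptions outside the sketch.

```python
import hashlib
import os
import tempfile


def sha256_of(path: str) -> str:
    """Hex SHA-256 digest of a file, read in 1 MiB chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def install_model(downloaded_path: str, expected_sha256: str, active_path: str) -> bool:
    """Activate a downloaded model only if its checksum matches the expected digest."""
    if sha256_of(downloaded_path) != expected_sha256:
        os.remove(downloaded_path)  # discard a corrupted or tampered download
        return False
    # On POSIX, a same-filesystem replace is atomic: readers see the old model
    # or the new one, never a partially written file.
    os.replace(downloaded_path, active_path)
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        new_path = os.path.join(workdir, "model.new")  # hypothetical file names
        active_path = os.path.join(workdir, "model.bin")
        with open(new_path, "wb") as f:
            f.write(b"quantized-weights")
        expected = hashlib.sha256(b"quantized-weights").hexdigest()
        print(install_model(new_path, expected, active_path))  # True
```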

Future Outlook

Salman predicted three key trends:

Hybrid Inference: Collaborative computing frameworks that pair LLMs in the cloud with SLMs at the edge.

Neural Compression: 1B-parameter models matching the accuracy of 3B-parameter models.

Domain-Specific Chips: SLM accelerators with Tesla Dojo-like architectures.
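One simple way to realize hybrid inference is confidence-based routing: the edge SLM answers by default, and the query is escalated to a cloud LLM only when the SLM's confidence falls below a threshold and a link is available. The model callables and the 0.8 threshold below are hypothetical; the source does not specify a routing policy.

```python
from typing import Callable, Optional, Tuple

EdgeModel = Callable[[str], Tuple[str, float]]  # returns (answer, confidence in [0, 1])
CloudModel = Callable[[str], str]


def hybrid_infer(query: str, edge_model: EdgeModel,
                 cloud_model: Optional[CloudModel], threshold: float = 0.8) -> Tuple[str, str]:
    """Answer locally when the edge SLM is confident or the cloud is unreachable;
    otherwise escalate to the cloud LLM. Returns (answer, where_it_ran)."""
    answer, confidence = edge_model(query)
    if confidence >= threshold or cloud_model is None:
        return answer, "edge"
    return cloud_model(query), "cloud"


if __name__ == "__main__":
    # Hypothetical stand-ins for real model calls.
    edge = lambda q: ("reduce reefer load by 5%", 0.55)
    cloud = lambda q: "shift reefer load to generator 2"
    print(hybrid_infer("optimize power for next 6h", edge, cloud))  # escalates to cloud
    print(hybrid_infer("optimize power for next 6h", edge, None))   # offline: stays on edge
```

Passing `None` for the cloud model models a ship at sea without connectivity, so the edge answer is always used as the fallback.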

“SLMs are not simplified versions of LLMs but new entities reborn for industrial scenarios; they act like seasoned chief engineers, making instinctive yet precise decisions during storms.”

– Salman Sattar

This evolution is beginning to set new industry standards: ABI Research predicts that by 2027, 65% of industrial edge devices will have built-in SLM capabilities, and that fuel optimization alone could save the shipping industry $27 billion a year. For engineers, the ability to customize SLMs for specific domains (e.g., with LoRA fine-tuning) will become a core competency in the Industry 4.0 era.
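LoRA (Low-Rank Adaptation) fine-tunes a model by freezing the pretrained weight matrix W and learning a low-rank update, so a layer computes y = Wx + (α/r)·B(Ax). The sketch below shows that forward pass with plain Python lists, assuming a single linear layer; the dimensions and values in the example are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Matrix-vector product for row-major nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float], alpha: float) -> List[float]:
    """y = W x + (alpha / r) * B (A x).
    W (d_out x d_in) is frozen; A (r x d_in) and B (d_out x r) are the trainable factors."""
    r = len(A)  # LoRA rank
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + (alpha / r) * d for b, d in zip(base, delta)]


def lora_trainable_params(d_out: int, d_in: int, r: int) -> int:
    """Parameters in A and B, versus d_out * d_in for full fine-tuning of W."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen pretrained weights (illustrative)
    A = [[1.0, 1.0]]              # rank r = 1
    B = [[0.5], [0.0]]
    print(lora_forward(W, A, B, [1.0, 2.0], alpha=1.0))  # [2.5, 2.0]
```

Only A and B are trained: for a 4096 × 4096 layer with rank r = 8, that is 8 × (4096 + 4096) = 65,536 trainable parameters instead of 16,777,216, about 0.4%. B is usually initialized to zeros, so training starts from the unchanged base model.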